Databricks AI/BI: What It Is & Why Enterprises Should Care

In the world of data, modern enterprises wrestle with three big challenges: speed, accuracy, and usability. You want insights fast, you want them reliable, and you want non‐technical people (execs, marketers, operations) to be able to get value without depending constantly on data engineers.

That’s where Databricks AI/BI comes in: a newer offering from Databricks that blends business intelligence with AI to make insights more accessible, timely, and trustworthy.

What is Databricks AI/BI?

Databricks AI/BI is a product suite that combines a low-code / no-code dashboarding environment with a conversational interface powered by AI. Key components include:

- AI/BI Dashboards: Let users build interactive dashboards and visualizations using drag-and-drop or natural-language prompts. Dashboards run on Databricks SQL warehouses and the Photon engine for high performance.

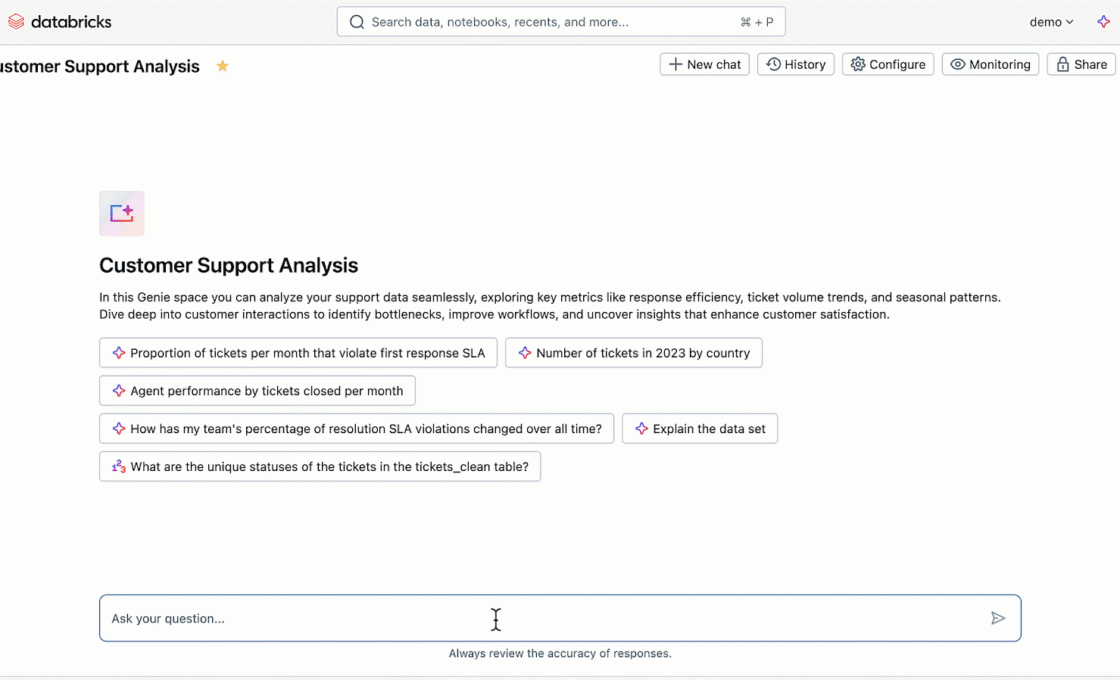

- Genie: A conversational, generative-AI interface where users ask questions in natural language, receive answers as visualizations or SQL, get suggested visualizations, and dig deeper with follow-up questions. It improves over time through usage and feedback.

- Unity Catalog foundation: AI/BI is built on Unity Catalog, which handles governance, lineage, and permissions, so dashboards and Genie responses respect existing access controls and remain auditable.

- Native integration with Databricks’ data platform (SQL warehouses, Photon engine, etc.), so enterprises don’t need to extract data into a separate BI system. This improves freshness, reduces duplication, and simplifies management.

Databricks Genie

AI/BI Genie uses a compound AI system rather than a single, monolithic AI model.

Matei Zaharia and Ali Ghodsi, two of the founders of Databricks, describe a compound AI system as one that “tackles AI tasks using multiple interacting components, including multiple calls to models, retrievers, or external tools.”

Use Cases: How Enterprises Are Using AI/BI

Here are some of the ways enterprises are applying it, or can apply it:

- Ad-hoc investigations of customer behaviour

Business users (marketing, product) can use Genie to ask questions like “Which customer cohorts churned last quarter?” or “How did a campaign perform in region X vs Y?” without waiting for engineers to write SQL or build pipelines.

- Operational dashboards for teams

Operations, supply chain, finance, and similar teams get dashboards that update frequently and support interactive filtering and cross-visualization slicing, which gives them near-real-time monitoring.

- Reducing the BI backlog and bottlenecks

When data teams are overwhelmed by requests for new dashboards, tools that let business users do more themselves free up engineering to focus on more strategic work (data pipelines, ML, etc.).

- Governance and compliance

Enterprises in regulated industries (finance, healthcare, etc.) need traceability: where data came from, who used it, and what transformations it passed through. AI/BI supports this through Unity Catalog lineage and trusted assets in Databricks.

- Data democratization

Lowering the barrier to entry lets a wider set of users explore data, ask questions, and derive insights. This spreads data literacy and builds a data culture.

- Integration with ML / AI workflows

Because AI/BI runs on Databricks, it is easier to connect dashboards and conversational insights with predictive models, for example to bring in forecasts or anomaly detection, or to embed BI into AI-powered apps.
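As an illustration of the ad-hoc investigation use case above, the sketch below shows the kind of SQL a question like “Which customer cohorts churned last quarter?” might translate into. It uses Python's built-in `sqlite3` as a stand-in for a SQL warehouse; the `customers` table, its columns, and the sample rows are hypothetical, and the query is one plausible reading of the question rather than what Genie would actually generate.

```python
import sqlite3

# In-memory SQLite database standing in for a SQL warehouse (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (customer_id INTEGER, cohort TEXT, churned_on TEXT)"
)
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "2023-Q1", "2024-05-10"),
        (2, "2023-Q1", None),          # still active
        (3, "2023-Q2", "2024-04-02"),
        (4, "2023-Q2", "2024-06-21"),
        (5, "2023-Q3", "2024-01-15"),  # churned before the quarter of interest
    ],
)

# Count churned customers per cohort within a half-open date range.
CHURN_BY_COHORT = """
SELECT cohort, COUNT(*) AS churned_customers
FROM customers
WHERE churned_on >= ? AND churned_on < ?
GROUP BY cohort
ORDER BY churned_customers DESC, cohort
"""


def churn_by_cohort(quarter_start: str, quarter_end: str) -> list[tuple]:
    """Return (cohort, churned_customers) rows for the given quarter."""
    return conn.execute(CHURN_BY_COHORT, (quarter_start, quarter_end)).fetchall()


rows = churn_by_cohort("2024-04-01", "2024-07-01")
print(rows)  # [('2023-Q2', 2), ('2023-Q1', 1)]
```

Even in this toy version, the question hides decisions (what counts as "churned", which dates bound "last quarter") that a business user would otherwise need an analyst to encode.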

Comparison

| Feature | Databricks AI/BI + Genie | Tableau Ask Data | Power BI (with Copilot / Q&A) |
|---|---|---|---|
| Parent Platform | Databricks Lakehouse (unified data, AI & BI) | Tableau / Salesforce ecosystem | Microsoft Fabric / Power Platform |
| Core Vision | Unify data, AI, and BI in one governed Lakehouse. BI happens where data lives. | Simplify visualization creation via natural language. | Infuse Copilot into all Microsoft tools, including BI, for everyday productivity. |
| AI Layer | Genie – a generative AI agent that answers questions using enterprise data and metadata, governed by Unity Catalog. | Ask Data – NLP-based query translation for Tableau data sources. | Copilot / Q&A – GPT-powered natural language for Power BI datasets, integrated into Fabric. |
| Underlying Data Model | Databricks SQL Warehouse (Photon Engine) – operates directly on Lakehouse data (no extracts). | Extract-based (Hyper engine) or live connection to relational DBs. | Semantic Model / Tabular Dataset inside Power BI Service. |
| Governance | Strong – via Unity Catalog (data lineage, permissions, certified datasets). | Moderate – uses Tableau permissions and data source governance. | Strong – via Microsoft Purview + Fabric unified governance. |
| User Experience | Conversational (chat-style) + dashboard creation. Unified with AI/BI dashboards. | Type queries in Ask Data → generates visual. Embedded inside Tableau dashboards. | Ask natural language inside Power BI (Q&A) or use Copilot to auto-build visuals/reports. |
| Performance | Very high (Photon vectorized execution). Real-time queries on raw or curated data. | Depends on extract refresh or live connection. | Excellent on in-memory Tabular Models; limited by dataset size. |
| AI Customization | Uses enterprise metadata from Unity Catalog; can fine-tune prompts with context. | Limited NLP customization (no fine-tuning). | Some customization using “synonyms” and semantic model metadata. |
| Integration with ML/AI Models | Natively integrated (Lakehouse supports MLflow, feature store, LLMOps). | External ML integration (via Salesforce Einstein or Python). | Integrated via Microsoft Fabric + Azure ML. |
| Ideal User Persona | Enterprises already in Databricks ecosystem (data engineers, analysts, PMs, CXOs). | Business analysts and Tableau users who want easier visual exploration. | Office 365 / Azure enterprises seeking seamless Copilot-powered analytics. |

Conclusion

Databricks AI/BI is a powerful step forward in the evolution of enterprise analytics. It blends BI and AI so that enterprises can move faster, more securely, and more democratically with their data.

All three tools represent the evolution of Business Intelligence toward “AI-Native BI.” But here’s the philosophical difference:

- Tableau → still visualization-first, AI as a helper.

- Power BI → productivity-first, AI as a co-pilot.

- Databricks → data-first, AI as the core intelligence layer that unifies data, analytics, and governance.

For organizations that already use Databricks or are building a data lakehouse / unified analytics platform, AI/BI offers a way to retire some complex pipelines, reduce the BI backlog, and bring more teams into analytics, all while maintaining governance and performance.

References:

https://learn.microsoft.com/en-us/azure/databricks/genie

https://atlan.com/know/databricks/databricks-ai-bi-genie