Shift-Left, Shift-Right: The Twin Strategies Powering Modern IT and Data Operations

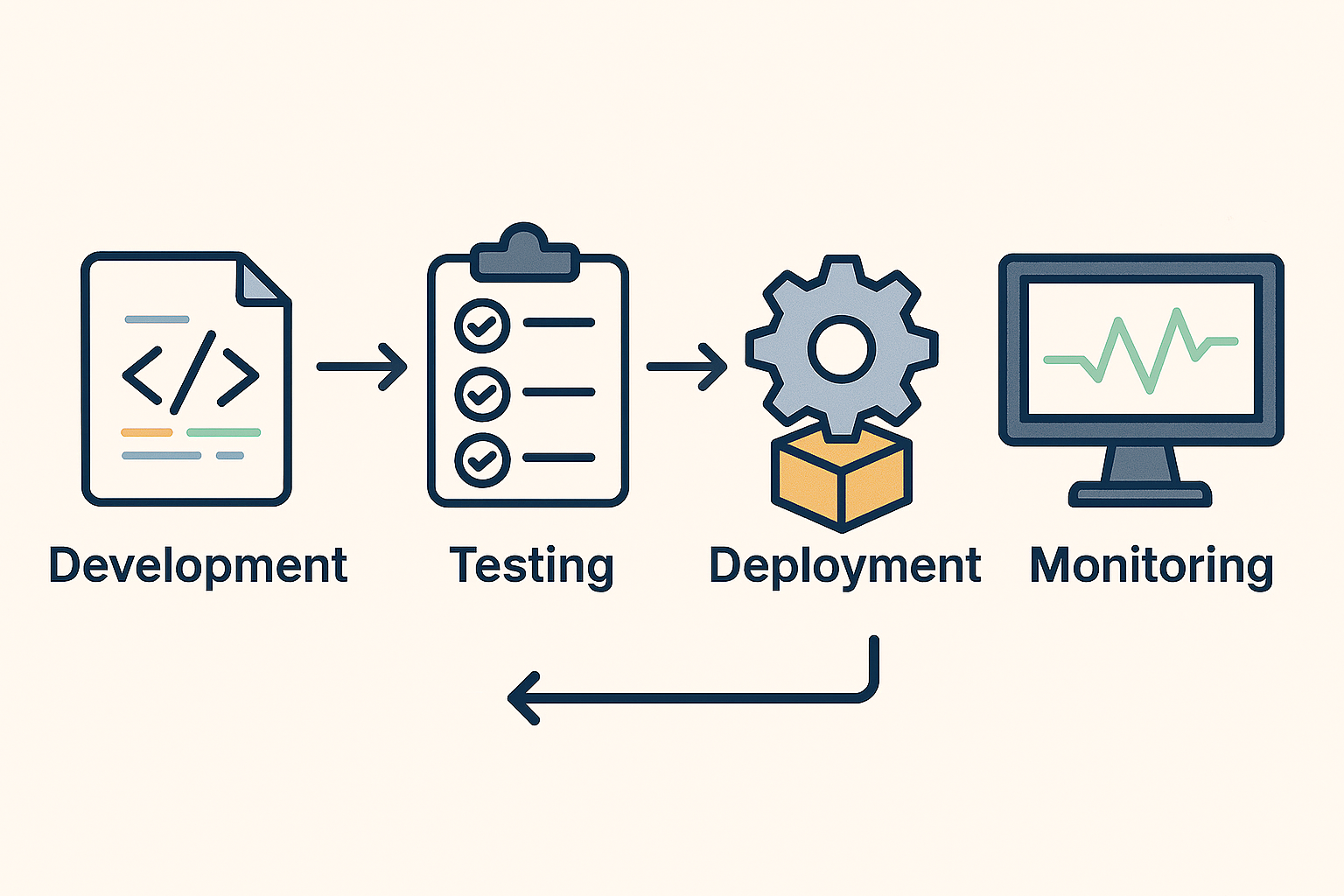

In today’s always-on digital enterprises, downtime and performance issues come at a steep cost. The modern DevOps philosophy has redefined how organizations build, test, deploy, and manage software and data systems. Two terms, Shift-Left and Shift-Right, capture this evolution.

These approaches are not just technical buzzwords; they represent a cultural and operational transformation from reactive troubleshooting to proactive prevention and continuous improvement.

1. What Does “Shift-Left” Mean?

“Shift-Left” is all about moving quality and risk management earlier in the lifecycle, to the “left” of the traditional project timeline.

Historically, teams tested applications or validated data after development. By that stage, identifying and fixing issues became expensive and time-consuming.

Shift-Left reverses that by embedding testing, data validation, and quality assurance right from design and development.

Real-world example:

- Microsoft uses Shift-Left practices by integrating automated unit tests and code analysis in its continuous integration (CI) pipeline. Each new feature or update is tested within minutes of being committed, drastically reducing post-release defects.

- In a data engineering context, companies like Databricks and Snowflake promote Shift-Left Data Quality – validating schema, freshness, and business rules within the pipeline itself before data lands in analytics or AI systems.
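
To make the data engineering example concrete, here is a minimal Python sketch of an in-pipeline check covering schema, freshness, and one business rule. It is an illustration under stated assumptions, not how Databricks or Snowflake implement data quality: the `orders` columns, the 24-hour freshness limit, and the `validate_batch` helper are all hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical expectations for an "orders" batch (illustrative names only).
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "created_at": datetime}
MAX_AGE = timedelta(hours=24)  # assumed freshness limit


def validate_batch(rows, now=None):
    """Return a list of violations; an empty list means the batch passes.

    Assumes each row is a dict and timestamps are timezone-aware (UTC).
    """
    now = now or datetime.now(timezone.utc)
    errors = []
    for i, row in enumerate(rows):
        # Schema check: every expected column is present with the expected type.
        bad_columns = [
            col for col, typ in EXPECTED_SCHEMA.items()
            if not isinstance(row.get(col), typ)
        ]
        if bad_columns:
            errors.append(f"row {i}: schema mismatch in {bad_columns}")
            continue
        # Business rule: order amounts must be positive.
        if row["amount"] <= 0:
            errors.append(f"row {i}: amount must be positive")
    # Freshness check: the newest timestamp in the batch must be recent enough.
    timestamps = [
        r["created_at"] for r in rows if isinstance(r.get("created_at"), datetime)
    ]
    if not timestamps or now - max(timestamps) > MAX_AGE:
        errors.append("batch: data is stale or empty")
    return errors
```

A pipeline step could call `validate_batch` before loading and refuse to publish the batch whenever the returned list is non-empty, so bad data is stopped before it reaches analytics or AI systems.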

Why it matters:

- Reduces defects and rework

- Improves developer productivity

- Speeds up deployment cycles

- Builds confidence in production releases

2. What Does “Shift-Right” Mean?

“Shift-Right” extends testing and validation after deployment, to the “right” of the timeline. It’s about ensuring systems continue to perform and evolve once they’re live in production.

Rather than assuming everything works perfectly after release, Shift-Right emphasizes continuous feedback, monitoring, and learning from real user behavior.

Real-world example:

- Netflix uses Shift-Right principles through its famous Chaos Engineering practice. By intentionally disrupting production systems (e.g., shutting down random servers), it tests the resilience of its streaming platform in real-world conditions.

- Airbnb runs canary deployments and A/B tests to validate new features with a subset of users in production before a global rollout – ensuring a smooth and data-driven experience.
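
One common way to pick the "subset of users" for a canary or A/B test is deterministic hashing, so the same user always sees the same variant. The Python sketch below illustrates that general idea only. It is not Airbnb's implementation, and the `in_canary` function, its parameters, and the feature name are hypothetical.

```python
import hashlib


def in_canary(user_id: str, feature: str, rollout_percent: float) -> bool:
    """Deterministically decide whether a user is in the canary group.

    rollout_percent is a value from 0 to 100 (hypothetical convention).
    """
    # Hash feature + user so each feature gets its own stable split.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # bucket in the range 0..9999
    return bucket < rollout_percent * 100


# Example: route roughly 5% of users to the new code path.
if in_canary("user-42", "new-checkout", rollout_percent=5):
    variant = "canary"
else:
    variant = "stable"
```

Raising `rollout_percent` step by step widens the rollout without reshuffling users who are already in the canary group, which keeps the production comparison consistent.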

Why it matters:

- Improves reliability and resilience

- Enables real-time performance optimization

- Drives continuous learning from production data

- Enhances customer experience through fast iteration

3. When Shift-Left Meets Shift-Right

In modern enterprises, Shift-Left and Shift-Right are not opposites – they’re complementary halves of a continuous delivery loop.

- Shift-Left ensures things are built right.

- Shift-Right ensures they continue to run right.

Together, they create a closed feedback system in which insights from production feed back into design and development, creating a self-improving operational model.

Example synergy:

- A global retailer might Shift-Left by embedding automated regression tests in its data pipelines.

- It then shifts right by using AI-based anomaly detection on its production dashboards to monitor data drift, freshness, and latency.

- Insights from production failures are looped back into early validation scripts, closing the quality loop.
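
The production-side monitoring in this example can be sketched with a simple statistical check. The Python snippet below uses a plain z-score test as a stand-in for the AI-based anomaly detection mentioned above. It is a deliberately simple substitute, not a machine learning model, and the `is_anomalous` helper, the threshold of 3, and the sample latency values are assumptions for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it is more than `threshold` standard deviations
    away from the mean of `history` (a basic z-score test)."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past values are identical, so any change counts as anomalous.
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical pipeline latencies in minutes for recent runs.
recent_latency = [10, 11, 9, 10, 12, 10, 11]
alert = is_anomalous(recent_latency, latest=30)
```

When a check like this fires in production, the triggering condition can be turned into a new pre-deployment validation rule, which is the step that closes the loop between the two shifts.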

4. The AI & Automation Angle

Today, AI and AIOps (AI for IT Operations) are supercharging both shifts:

- Shift-Left AI: Predictive code scanning, intelligent test generation, and synthetic data generation.

- Shift-Right AI: Real-time anomaly detection, predictive incident management, and self-healing automation.

The result? Enterprises can move from manual monitoring toward more autonomous operations, freeing up teams to focus on innovation instead of firefighting.

The future of enterprise IT and data operations isn’t about reacting to problems – it’s about preventing and learning from them continuously.

“Shift-Left” ensures quality is baked in early; “Shift-Right” ensures reliability is sustained over time.

Together, they represent the heart of a modern DevOps and DataOps culture: a loop of prevention, observation, and evolution.